Optical metrology systems play a crucial role in various industries, enabling accurate and reliable measurements for quality control, inspection, and manufacturing processes. To ensure precise and consistent results, it is essential to calibrate these systems meticulously. By understanding the significance of calibration, considering key factors, and implementing regular calibration practices, organizations can optimise the performance of these systems. Accurate measurements obtained through properly calibrated optical metrology systems empower industries to maintain quality control, enhance efficiency, and drive continuous improvement.

How to calibrate your industrial vision system.

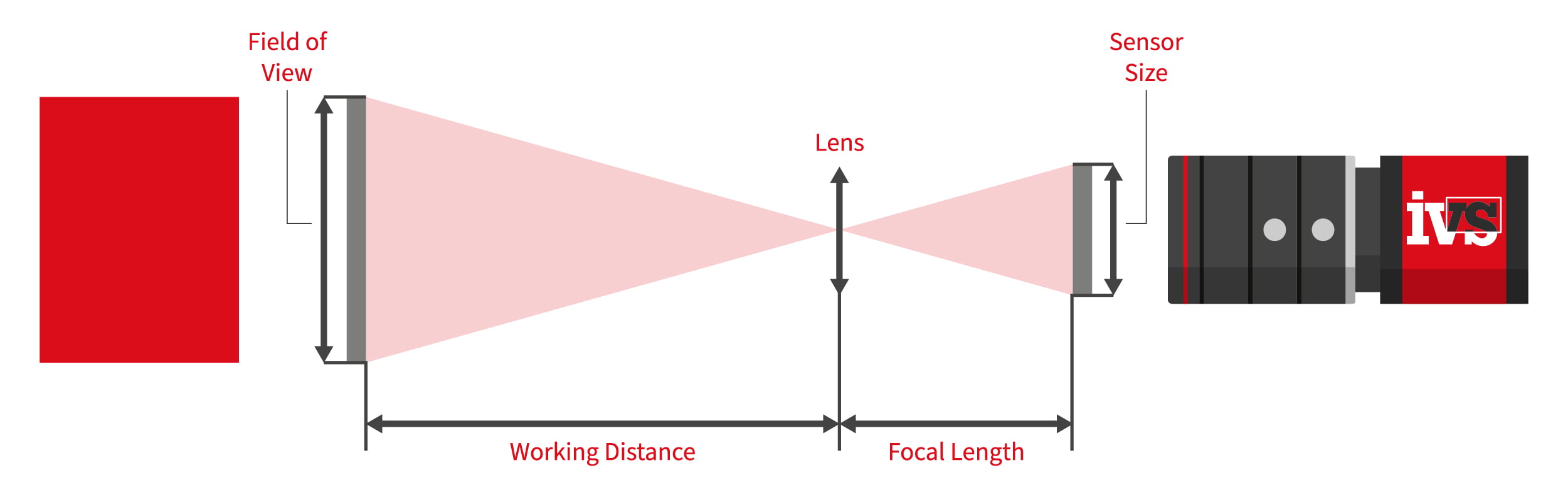

We’re often asked about the process for carrying out calibration on our automated vision inspection machines. After all, a vision system measures in pixels, and the real-world size that each pixel represents depends on the sensor resolution, field of view and optical quality. It’s important that any measurements are validated and confirmed from shift to shift and day to day. Many vision inspection machines are replacing slow, stand-alone, probe-based metrology systems, while in-line systems perform multiple measurements on a device at high speed. Automating metrology measurement helps reduce cycle time and boost productivity in medical device manufacturing, so accuracy and repeatability are critical.

In the realm of optical metrology, the utilisation of machine vision technology has brought about a transformative shift. However, to ensure that the results of the vision equipment have a meaning that everyone understands, the automated checks must be calibrated against recognised standards, facilitating compliance with industry regulations, certifications, and customer requirements.

The foundational science that instils confidence in the interpretation and accuracy of measurements is known as metrology. It encompasses all aspects of measurement, from careful planning and execution to thorough record-keeping and evaluation, along with the computation of measurement uncertainties. The objectives of metrology extend beyond the execution of measurements; they include the establishment and maintenance of measurement standards, the development of new and efficient techniques, and the promotion of widespread recognition and acceptance of measurements.

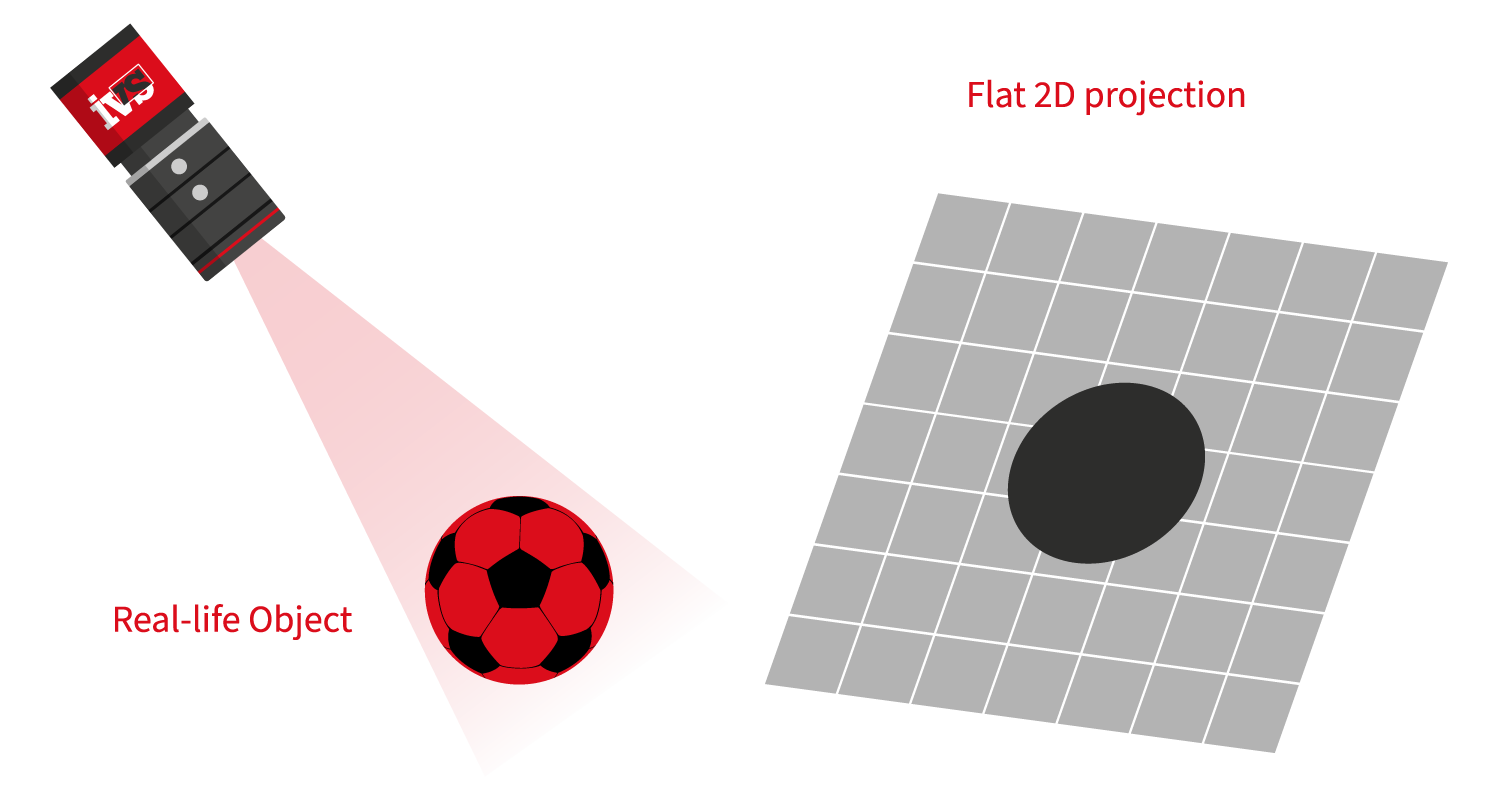

For metrology measurements, we need a correlation between the pixels and the real-world environment. For those of you who want the technical detail, we’re going to dive down into the basis for the creation of individual pixels and how the base pixel data is created. In reality, the users of our vision systems need not know this level of technical detail, as the main aspect is the calibration process itself – so you can skip the next few paragraphs if you want to!

In machine vision, the camera sensor is divided into individual pixels. Each pixel represents a tiny light-sensitive region that, when exposed to light, creates an electric charge. When an image is acquired, the vision system captures the amount of charge produced at each pixel position and stores it in a memory array. A convention is used to give pixel positions a uniform reference system: the top left corner of the image is regarded as the origin, with the X-axis running horizontally along the rows of the sensor and the Y-axis running vertically down the columns.

Pixel positions inside the image can be described using the X and Y coordinates in this coordinate system. For example, the top left pixel is called (0,0), the pixel to its right is called (1,0), the pixel below it is called (0,1), and so on. This convention enables the image’s pixels to be referred to in a consistent and standardised manner. Combined with sub-pixel processing, it gives a standard reference coordinate position for a set of pixels that together form an edge, feature or “blob”.
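As a minimal sketch of this convention, the Python snippet below uses NumPy (an assumption, as the article names no particular library) to look up pixels by (x, y) and to compute a sub-pixel centroid for a small blob. The image contents, the `pixel_at` helper and the `blob_centroid` helper are all hypothetical and purely illustrative.

```python
import numpy as np

# Hypothetical 4-row x 6-column image; values are arbitrary grey levels.
image = np.arange(24, dtype=np.uint8).reshape(4, 6)


def pixel_at(img, x, y):
    """Return the value at pixel (x, y): origin top-left, X right, Y down."""
    # NumPy indexes arrays as [row, column], which is [y, x] in this convention.
    return img[y, x]


def blob_centroid(mask):
    """Return the (x, y) centroid of all non-zero pixels in a binary mask."""
    ys, xs = np.nonzero(mask)
    return float(xs.mean()), float(ys.mean())


top_left = pixel_at(image, 0, 0)         # pixel (0,0)
right_of_origin = pixel_at(image, 1, 0)  # pixel (1,0)
below_origin = pixel_at(image, 0, 1)     # pixel (0,1)

# A 2x2 blob covering pixels (1,1), (2,1), (1,2) and (2,2).
mask = np.zeros((4, 6), dtype=bool)
mask[1:3, 1:3] = True
centroid = blob_centroid(mask)  # lies between pixel centres: (1.5, 1.5)
```

Note that the centroid falls between whole pixel positions, which is the simplest form of the sub-pixel positioning mentioned above.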

In machine vision, metrology calibration involves mapping the pixel coordinates of the vision system sensor back to real-world coordinates. This “mapping” ties the distances measured back to real-world units, i.e., millimetres, microns or another defined unit of measurement. Without calibration, any edges, lines or points measured would be expressed in pixels only. But quality engineers need real, tangible measurements to validate the production process, so all systems must be pre-calibrated to known measurements.
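In the simplest case, where the camera looks squarely at a flat part and lens distortion is negligible, this mapping reduces to a single scale factor. The sketch below illustrates that assumption only; the function names and the numbers are hypothetical, and a production system will typically use a more complete model.

```python
import math


def mm_per_pixel(p1, p2, known_mm):
    """Scale factor from a reference feature of certified length known_mm,
    measured in the image between pixel positions p1 and p2."""
    pixel_dist = math.dist(p1, p2)
    if pixel_dist == 0:
        raise ValueError("reference points must be distinct")
    return known_mm / pixel_dist


def distance_mm(p1, p2, scale):
    """Convert the pixel distance between two points to millimetres."""
    return math.dist(p1, p2) * scale


# Hypothetical calibration: a 10 mm reference spans 800 pixels in the image.
scale = mm_per_pixel((100.0, 200.0), (900.0, 200.0), known_mm=10.0)

# Hypothetical part feature whose edges are found 400 pixels apart.
width_mm = distance_mm((250.0, 300.0), (650.0, 300.0), scale)
```

Here the scale works out at 0.0125 mm per pixel, so the 400-pixel feature measures about 5 mm. The pixel positions can be sub-pixel values, which is why they are passed as floats.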

The process of calibration ensures traceability of measurements to recognised standards, facilitating compliance with industry regulations, certifications, and customer requirements. It enhances the credibility and trustworthiness of measurement data.

How do you calibrate the vision system in real-world applications?

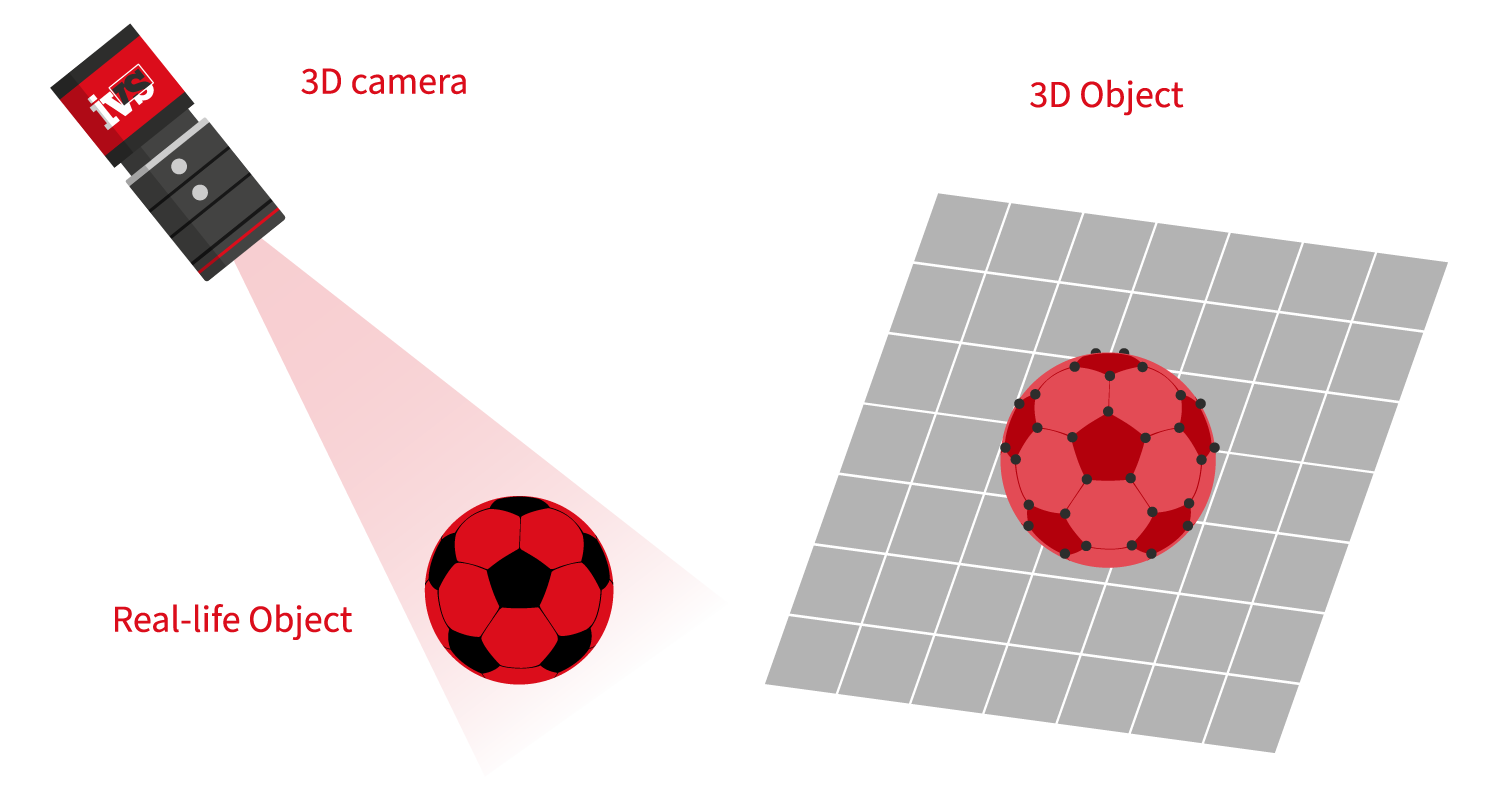

Utilising certified calibration artefacts or reference objects with known dimensions and properties is essential. These standards serve as benchmarks for system calibration, enabling the establishment of accurate measurement references. Proper calibration removes known systematic errors from the measurements, supporting the reliability and consistency of the data collected. The method of calibration will depend on whether the system is a 2D or 3D vision system.

For a 2D vision system, common practice is to use slides with scales etched or printed on them, referred to as micrometer slides, stage graticules or calibration slides. They come in a diverse range of sizes, subdivision accuracies and scale patterns. These slides are used to calibrate the vision system and to verify that calibration. The calibration pieces are traceable back to national standards, which is key to calibrating the vision inspection machine effectively. A machined piece with traceability certification can also be used; this is convenient when you need a specific dimension to calibrate from for your vision metrology inspection.

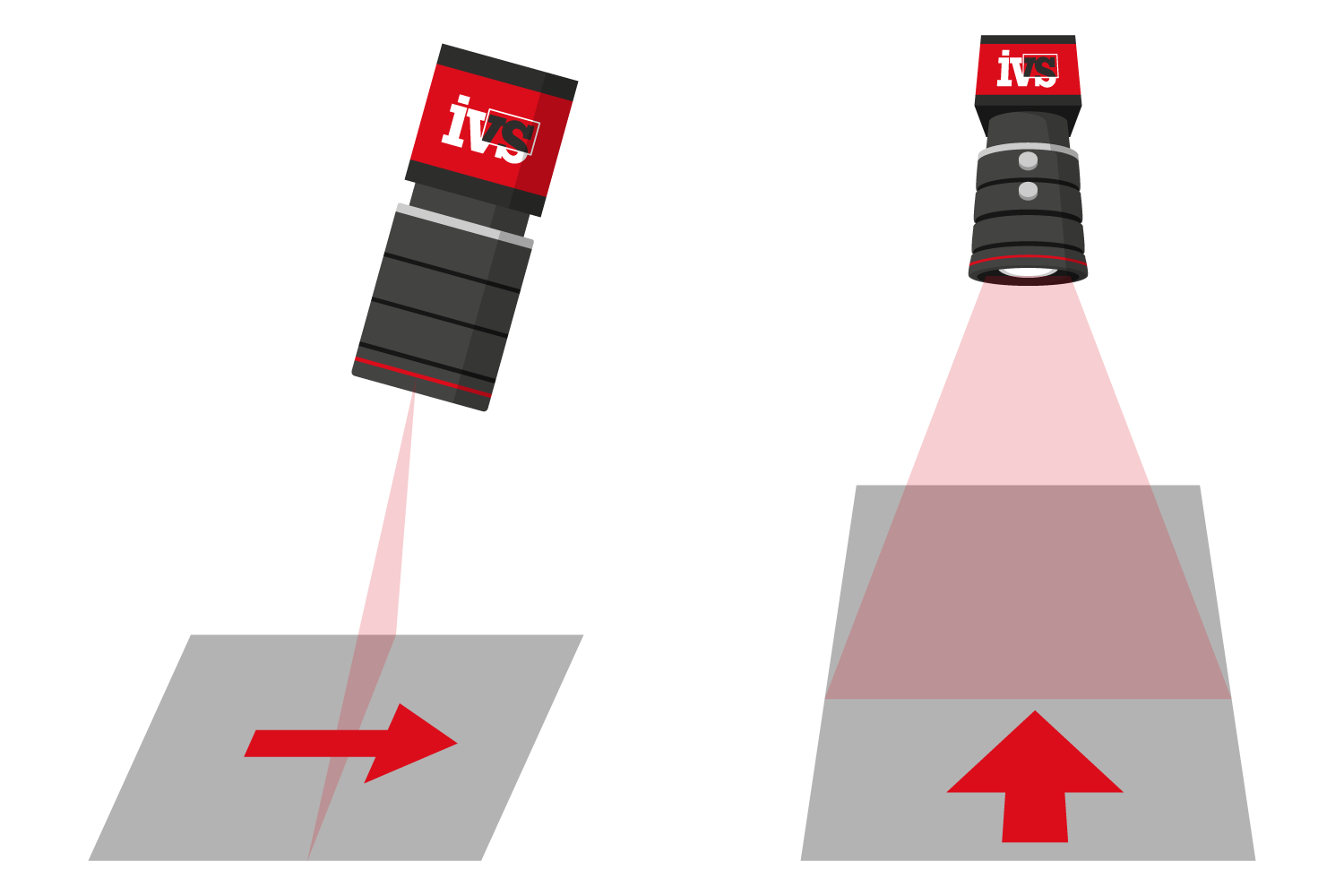

One form of optical distortion is called linear perspective distortion. This type of distortion occurs when the optical axis is not perpendicular to the object being imaged. A chart can be used with a pre-defined pattern to compensate for this distortion through software. The calibration system does not compensate for spherical distortions and aberrations introduced by the lens, so this is something to be aware of.
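One common way to compensate for perspective distortion in software is to fit a planar homography between chart points found in the image and their known positions on the chart. The sketch below shows that general technique using NumPy; it is not a description of the method used in any particular machine, and the corner coordinates and function names are hypothetical. Like the calibration described above, it does not correct lens distortion.

```python
import numpy as np


def fit_homography(pixel_pts, world_pts):
    """Fit a 3x3 homography mapping pixel (x, y) to world (X, Y).

    Needs at least four correspondences, no three of them collinear.
    """
    rows, rhs = [], []
    for (x, y), (X, Y) in zip(pixel_pts, world_pts):
        # From X = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for Y.
        rows.append([x, y, 1, 0, 0, 0, -X * x, -X * y])
        rhs.append(X)
        rows.append([0, 0, 0, x, y, 1, -Y * x, -Y * y])
        rhs.append(Y)
    h, *_ = np.linalg.lstsq(np.array(rows, dtype=float),
                            np.array(rhs, dtype=float), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def pixel_to_world(H, x, y):
    """Map one pixel position to world coordinates using homography H."""
    X, Y, w = H @ np.array([x, y, 1.0])
    return X / w, Y / w


# Hypothetical chart: a 20 mm x 15 mm rectangle seen by a tilted camera.
pixel_corners = [(120.0, 80.0), (880.0, 110.0), (860.0, 620.0), (100.0, 570.0)]
world_corners = [(0.0, 0.0), (20.0, 0.0), (20.0, 15.0), (0.0, 15.0)]

H = fit_homography(pixel_corners, world_corners)
corner_mm = pixel_to_world(H, 880.0, 110.0)  # should land near (20, 0) mm
```

With more than four chart points the same least-squares fit averages out small detection errors, which is one reason calibration charts usually carry a dense grid of features.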

For 3D vision, you no longer need pricey bespoke artefacts or specially prepared calibration sheets. Either the sensor is factory calibrated or a single sphere suffices as the calibration object. Calibrate and synchronise the vision sensor by sending calibrated component measurements to the IVS machine. You will instantly receive visual feedback that you can check and assess during the calibration process.

How often do I need to re-calibrate a vision system?

We often get asked this question, since the performance and accuracy of optical metrology systems can diminish over time due to factors like wear and tear or component degradation. Establishing a regular calibration schedule ensures consistent and reliable measurements. However, the interval often comes down to the application requirements and the customer’s needs based on their validation procedures. Calibration can take place once a shift, every two weeks, once a month or even once a year.

One final aspect is storage. All our vision inspection machines come with calibration storage built into the machine itself. It’s important to store the calibration piece carefully, use it as determined by the validation process, and keep it clean and free from dirt.

Overall, calibration is often automatic, and the user need never know this level of detail on how the calibration procedure operates. But it’s useful to understand how pixel data maps to real-world measurements and to know that all systems are calibrated back to traceable national standards.