In this post we drill down on robotic vision systems and how, in the context of industrial automation, they can help you boost productivity, increase yield and reduce quality problems in your manufacturing process.

The question of how to increase productivity in manufacturing is an ongoing debate, especially in the UK, where productivity has been subdued in recent years compared to other industrialised nations. The key to kickstarting productivity in manufacturing (output per hour worked) is greater use of automation, to produce more with less labour overhead. This takes the form of general industrial automation and robotic vision systems. By combining these two disciplines, manufacturers can boost productivity and become less reliant on transient labour and a temporary workforce that requires continuous training and replacement.
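As a rough illustration of the "output per hour worked" measure, here is a minimal sketch. The function name and all the figures are hypothetical, chosen only to show the arithmetic, and are not taken from any real production line.

```python
def output_per_hour(units_produced: float, labour_hours: float) -> float:
    """Labour productivity: units of output per hour worked."""
    if labour_hours <= 0:
        raise ValueError("labour_hours must be positive")
    return units_produced / labour_hours


# Hypothetical figures for a single shift, for illustration only.
manual = output_per_hour(units_produced=1200, labour_hours=32)    # 4 operators x 8 h
automated = output_per_hour(units_produced=1800, labour_hours=8)  # 1 operator x 8 h

print(f"manual: {manual:.1f} units/h, automated: {automated:.1f} units/h")
```

The point of the sketch is that productivity rises both when output goes up and when the labour hours needed to achieve it go down.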

So where do you start? Well, a thorough review of the current manufacturing process is a good place to begin. Where are the bottlenecks in flow? What tasks are labour-intensive? Where do processes slow down and start up again? Are there processes where temporary labour might not build to your required quality level? What processes require a lot of training? Which stations could easily lend themselves to automating?

When manufacturers deploy robot production cells, there can be a worry that the human element in the manufacturing process is being lost. “How will the robot spot a defect when no human is involved in production any more? We have always had the quality supervisor and his team checking quality while they build it, so who is going to do it now? The robot will never see what they did. And they mark a tally chart of the failures, so we are going to lose all our data!”

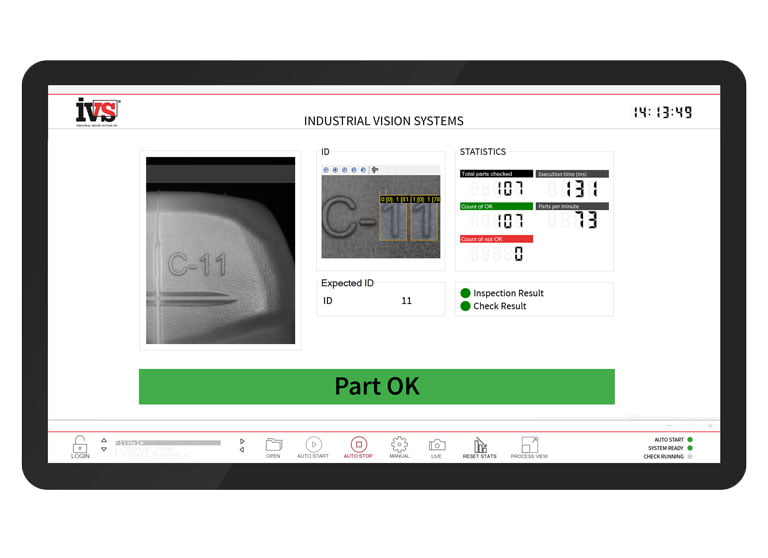

All these questions go through the mind of the quality director and engineering manager. The answer is to integrate vision systems at the same time as the robotic automation is deployed. This can be in the form of robotic vision or a separate stand-alone vision inspection process. The vision system verifies that each process step has been completed and becomes the eyes of the robot. Quality concerns relating to build level, presence of components, correct fitment of components and measurement against tolerances can all be addressed.

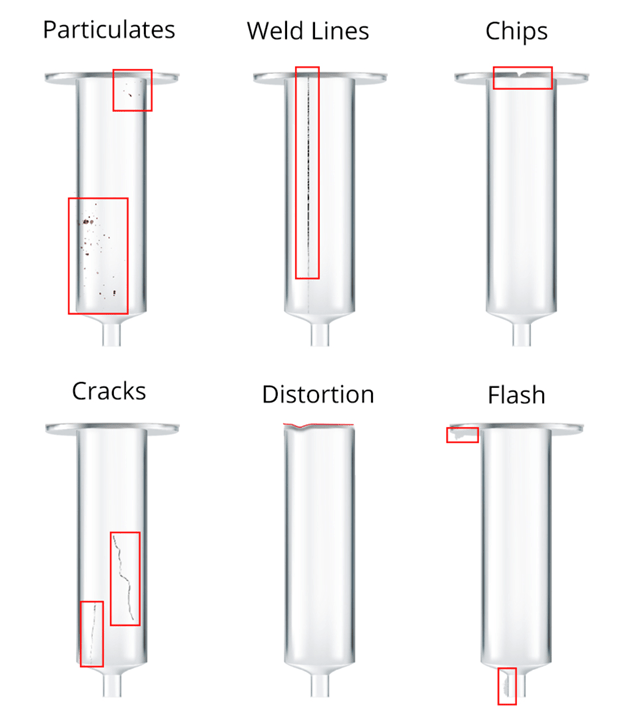

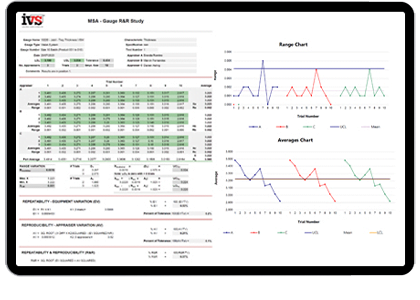

For example, a medical device company may automate the packing of syringe products. Perhaps the medical syringes are pre-filled, labelled and placed into trays, all with six-axis robots and automation. A machine vision system could be used after these tasks to check that the pre-filled syringe level is correct, that all components in the plastic device are fitted in the correct position and, finally, through an automated metrology check, that the medical device is within tolerance. And remember that the quality supervisor and his team also created a tally chart of failures. With modern vision systems, all of that data is stored automatically: batch information, failure rates and yield, as well as images of every product before it went out the door, for information and warranty protection.
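To show how the automatically stored data can stand in for the hand-written tally chart, here is a minimal sketch. The record fields, failure reason strings, batch IDs and file paths are all assumptions made up for illustration; a real vision system will have its own data format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InspectionRecord:
    """One inspected product (hypothetical record layout)."""
    batch_id: str
    serial: str
    passed: bool
    failure_reason: Optional[str] = None  # None when the product passed
    image_path: Optional[str] = None      # where the stored image lives


def summarise(records):
    """Return total, yield, failure rate and a failure tally for the records."""
    total = len(records)
    if total == 0:
        return {"total": 0, "yield": None, "failure_rate": None, "tally": Counter()}
    passed = sum(1 for r in records if r.passed)
    tally = Counter(r.failure_reason for r in records if not r.passed)
    return {
        "total": total,
        "yield": passed / total,
        "failure_rate": (total - passed) / total,
        "tally": tally,
    }


# Made-up records for one batch, for illustration only.
records = [
    InspectionRecord("B001", "S1", True, image_path="img/S1.png"),
    InspectionRecord("B001", "S2", False, "fill_level_low", "img/S2.png"),
    InspectionRecord("B001", "S3", True, image_path="img/S3.png"),
    InspectionRecord("B001", "S4", False, "component_misplaced", "img/S4.png"),
]
summary = summarise(records)
print(summary["yield"], dict(summary["tally"]))
```

The `Counter` plays the role of the tally chart, while the stored image path keeps each result traceable to the picture of the product.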

So how can robots replace operators? It normally comes down to moving some of the simpler tasks over to robots, including:

- Robot Inspection Cells – robotics and vision combine to provide fully automated inspection of raw parts, assemblies or sub-assemblies, replacing tedious human inspection.

- Robot Pick and Place – the ability for the robot to pick from a conveyor, at speed, replacing the human element in the process.

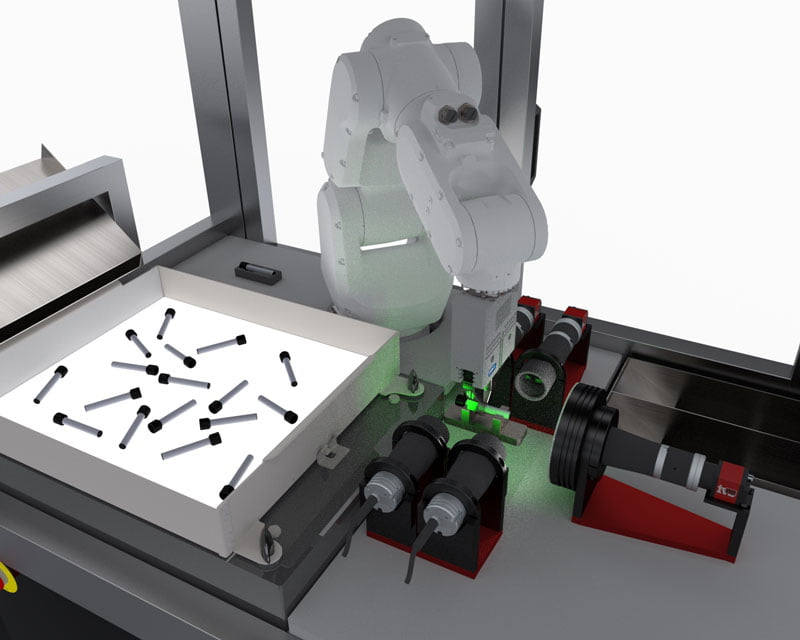

- Automated Bin Picking – by combining 3D vision with robot control feedback, a robot can autonomously pick randomly oriented parts from a bin so that they can be presented to the next process, loaded into a machine tool or even handed to a human operator, helping the line keep to its takt time.

- Robot Construction and Assembly – a task that robots have carried out for many years, but the advent of vision systems allows quality inspection to be combined with the assembly process.

- Industrial Automation – using vision systems to poka-yoke (mistake-proof) processes so that zero defects are passed forward in the manufacturing process, which is critical in a kanban, Just-in-Time (JIT) environment.

- Robot and Vision Packing – picking and packing into boxes, containers and pallets using a combination of robots and vision for precise feedback, reducing the human element in packing.
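The mistake-proofing idea in the list above, where a part only moves forward once the vision checks pass, can be sketched roughly as follows. The class, function name, check names and dimensions are hypothetical and stand in for whatever your vision system and cell controller actually report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VisionResult:
    """Outcome of one vision inspection (hypothetical fields)."""
    components_present: bool
    fitment_ok: bool
    measured_mm: float


def release_to_next_station(result, nominal_mm, tolerance_mm):
    """Return (ok, reasons); the part is released only if every check passes."""
    reasons = []
    if not result.components_present:
        reasons.append("missing component")
    if not result.fitment_ok:
        reasons.append("incorrect fitment")
    if abs(result.measured_mm - nominal_mm) > tolerance_mm:
        reasons.append("out of tolerance")
    return (not reasons, reasons)


# Made-up measurements, for illustration only.
good = VisionResult(components_present=True, fitment_ok=True, measured_mm=10.02)
bad = VisionResult(components_present=True, fitment_ok=False, measured_mm=10.20)

print(release_to_next_station(good, nominal_mm=10.0, tolerance_mm=0.05))
print(release_to_next_station(bad, nominal_mm=10.0, tolerance_mm=0.05))
```

Returning the list of reasons alongside the pass/fail flag means a rejected part can be logged with its cause rather than simply diverted.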

So, perhaps you can deploy robotic vision systems and industrial automation in your process to help solve your manufacturing problems. It takes capital investment up front, but in return you can streamline production, increase productivity and reduce reliance on untrained or casual workers in the manufacturing process.